Sonically-enhanced gesture-based in-vehicle menu navigation

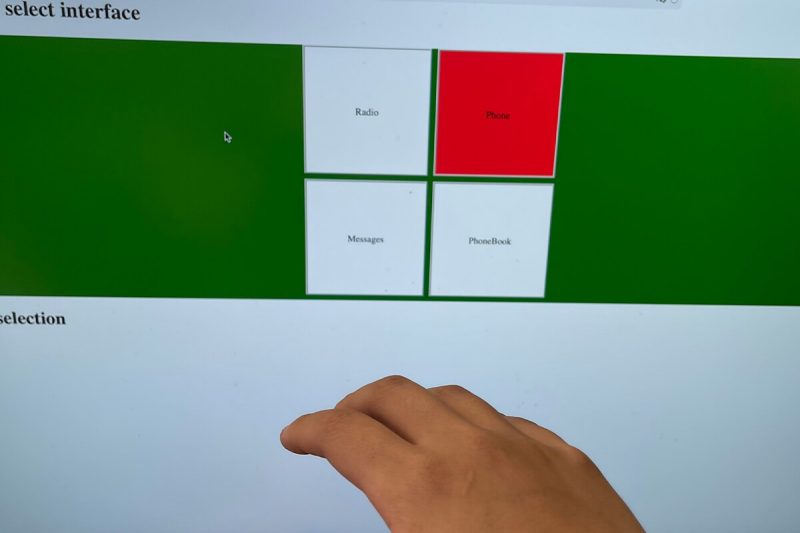

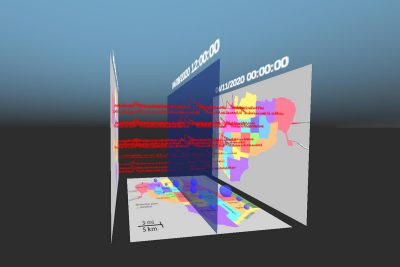

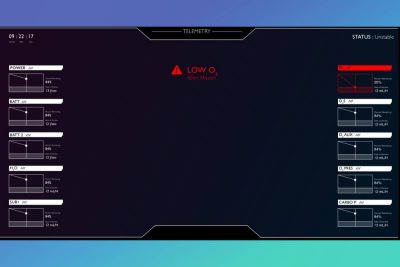

Competition for visual attention in vehicles has increased with the integration of novel touch-based infotainment systems, which has led to an increased crash risk. To mitigate this visual distraction, we have developed in-vehicle gesture-based menu systems with different auditory feedback types and hand-recognition systems. The menu has three pages, each a 2×2 grid of items, and is navigated by hand gestures. A Leap Motion controller serves as the air-gesture recognition interface, capturing the user’s hand movements and gestures. We are conducting an experiment in a driving simulator in which participants perform a secondary task of selecting a menu item. Three auditory feedback types are tested in addition to the baseline condition (no auditory feedback): auditory icons, earcons, and spearcons. For each type of auditory display, two hand-recognition systems are tested: fixed and adaptive. A fixed hand-recognition system requires the driver’s hand to be in the middle of the Leap Motion tracking range before it starts recognizing the command gesture. An adaptive hand-recognition system can be triggered with the driver’s hand anywhere in the Leap Motion tracking range. We expect this system to reduce drivers’ secondary-task workload while keeping their attention on the road, improving their safety.
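The difference between the fixed and adaptive hand-recognition systems, and the three-page 2×2 menu, can be illustrated with a minimal sketch. This is not the lab's implementation: the horizontal tracking range, the width of the "middle" zone, the gesture names (`swipe_left`, `swipe_right`, `select`), and the page wrap-around behaviour are all hypothetical values chosen for illustration, and no Leap Motion SDK calls are used (the palm position is passed in as a plain number).

```python
from dataclasses import dataclass
from typing import Callable, Optional, Tuple, Union

# Hypothetical horizontal tracking range in millimetres; the real Leap Motion
# range and the thresholds used in the study are not stated in the source.
TRACK_MIN_X = -200.0
TRACK_MAX_X = 200.0
CENTER_HALF_WIDTH = 50.0  # hypothetical half-width of the "middle" zone


def in_tracking_range(x: float) -> bool:
    return TRACK_MIN_X <= x <= TRACK_MAX_X


def fixed_trigger(x: float) -> bool:
    """Fixed mode: recognition starts only when the hand is near the middle."""
    center = (TRACK_MIN_X + TRACK_MAX_X) / 2
    return abs(x - center) <= CENTER_HALF_WIDTH


def adaptive_trigger(x: float) -> bool:
    """Adaptive mode: recognition starts anywhere inside the tracking range."""
    return in_tracking_range(x)


@dataclass
class Menu:
    pages: int = 3
    rows: int = 2
    cols: int = 2
    page: int = 0

    def next_page(self) -> None:
        self.page = (self.page + 1) % self.pages  # wrap-around is an assumption

    def prev_page(self) -> None:
        self.page = (self.page - 1) % self.pages

    def item_index(self, row: int, col: int) -> int:
        """Flat item index across all pages (0 .. pages*rows*cols - 1)."""
        if not (0 <= row < self.rows and 0 <= col < self.cols):
            raise ValueError("cell outside the 2x2 grid")
        return self.page * self.rows * self.cols + row * self.cols + col


Gesture = Union[str, Tuple[str, int, int]]


def handle_gesture(menu: Menu, gesture: Gesture, hand_x: float,
                   trigger: Callable[[float], bool]) -> Optional[int]:
    """Apply a gesture only if the trigger policy accepts the hand position.

    Returns the selected item index for a select gesture, otherwise None.
    """
    if not trigger(hand_x):
        return None  # hand not in an accepted position: ignore the gesture
    if gesture == "swipe_left":
        menu.next_page()
    elif gesture == "swipe_right":
        menu.prev_page()
    elif isinstance(gesture, tuple) and gesture[0] == "select":
        _, row, col = gesture
        return menu.item_index(row, col)
    return None


if __name__ == "__main__":
    menu = Menu()
    # A hand at x = 150 mm is ignored by the fixed mode but accepted by the adaptive one.
    print(handle_gesture(menu, "swipe_left", 150.0, fixed_trigger), menu.page)
    handle_gesture(menu, "swipe_left", 150.0, adaptive_trigger)
    print(menu.page)
    print(handle_gesture(menu, ("select", 1, 0), 0.0, fixed_trigger))
```

In this sketch the trigger policy is passed in as a function, so the same menu logic can be exercised under either recognition condition; the auditory feedback (auditory icons, earcons, or spearcons) would be played wherever the page changes or an item is selected.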

PRESENTERS

- Yusheng Cao

- Moustafa Tabbarah

- Yi Liu

- Myounghoon Jeon

ORGANIZATION

Mind Music Machine lab
