Results for: Human-computer interaction

-

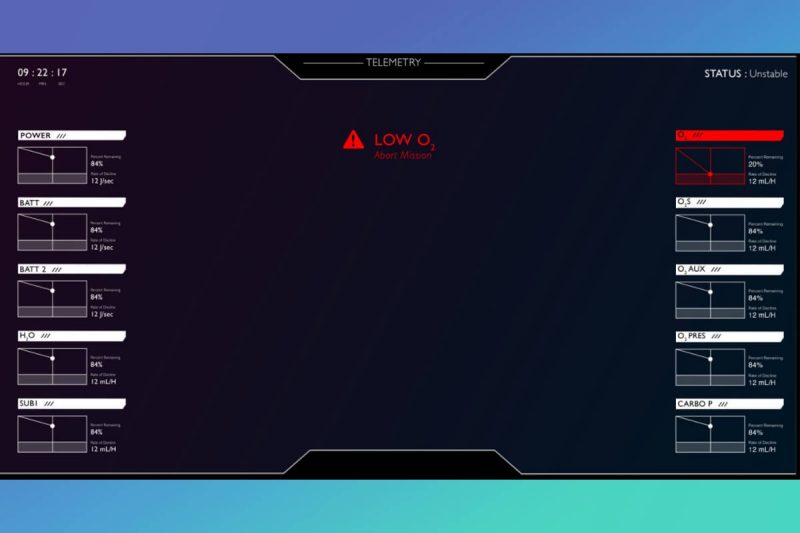

Hokienauts

Team Hokienauts at Virginia Tech is developing an augmented reality information display using the Microsoft HoloLens 2 in combination with various hardware components. Our design and implementation aim to assist crewmembers with navigation, geology sampling, suit vitals, and auxiliary conditions.

-

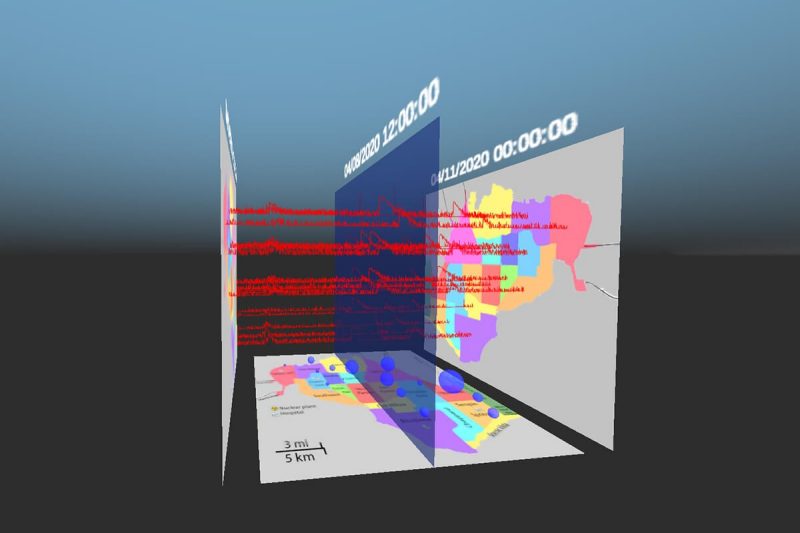

Immersive Analytics

Immersive analytics is an emerging field of data exploration and analysis in immersive environments. The main idea is to use visual analytics in a fully immersive 3D space. Immersive extended reality systems have recently become far more widely available, but they are still not as widely used as conventional 2D displays. We describe an immersive analysis system for spatio-temporal data and compare its performance in an immersive environment and on a conventional 2D display.

-

In-vehicle intelligent voice agent: Speech style vs. embodiment, which matters more?

In a fully autonomous vehicle setting, we found that both the conversational speech style and the embodiment (i.e., using a robot) of the agent increased drivers’ ratings of likability and perceived warmth, whereas conversational voice agents received higher anthropomorphism and animacy scores, and the robot agents received higher competence scores.

-

Music of the World

Music of the World is an interactive website that lets the user learn about different countries by clicking on a country and listening to some of its most popular songs.

-

Sonically-enhanced gesture-based in-vehicle menu navigation

We developed an in-vehicle gesture-based menu navigation system with three different auditory displays: auditory icons, earcons, and spearcons. We also developed two different hand-recognition systems: fixed and adaptive. We are conducting an experiment using a driving simulator where participants engage in menu navigation as a secondary task.

-

VR Haptic Glove

VR Haptic Glove provides haptic feedback to users to increase the sense of presence, engagement, and usability in virtual environments (games or productivity applications).

-

Visual Sensory Convergence

The immersive experience is designed to place a single participant in a virtual ecosphere that engages all five senses. Characters are physical interactive props with VIVE tracking, puppeteered by a team of live performers. Participants touch things that respond, smell things, and communicate through interactive sound.

-

Visual Sound Scapes

VisualSoundScapes is an advanced 3D music visualizer in a virtual reality environment. Our project uses a fusion of advanced mathematics and music theory to extract various features from a sound file and create an immersive VR experience.

-

instAllTech - Online Installation and Assistance Guides for All Things Technology

instAllTech is a single website for non-technical users to find tutorials and resources on installing and using various apps and software. Developed as part of a Human-Computer Interaction Capstone project, instAllTech focuses on addressing the difficult conversation of getting tech help from a distance.