Using Robotic Platforms

Current virtual reality devices can create very convincing visual and audio stimuli. However, the technology to fool other senses such as touch and smell is still not quite developed. The goal of this project is to investigate new ways to allow users to touch and manipulate virtual objects. Accurate haptics is an important contributor to immersion in virtual reality as it can increase the sense of presence and even the performance in certain types of tasks. We plan to develop a fun VR experience that will use robots to provide haptic feedback. This will also allow us to investigate how touch works together with vision to help us understand the world.

Midterm Report

The first part of the grant period was focused on creating scenarios and working on technical and design aspects. We looked into how our approach would work in two distinct scenarios: a creative, goal-directed virtual experience and a more open-ended virtual architectural simulation. Over the semester, the project involved five student volunteers: Sara Saghafi (Architecture PhD), Nanlin Sun (Computer Science), Zenghdao Jiao (CMDA), Junyuan Pang (CMDA), and Mihn Tran (Mechanical Engineering). We successfully advanced both scenarios, concluding the semester with an implementation of the game scenario (exhibited during the science festival) and a detailed mechanical design of the robot extensions necessary to implement the second scenario. We expect to have a working implementation of both scenarios by the end of the grant period.

Main Accomplishments

- Design and implementation of a VR Sokoban Game

- Implementation of simple player prediction

- Demo of the VR Sokoban during Virginia Science Festival

- Exploration of the architectural design space

- Conceptual design of the architectural haptic system

- Mechanical design and stress analysis of the architectural haptic system

- ROS - Unity integration

The VR Sokoban Scenario

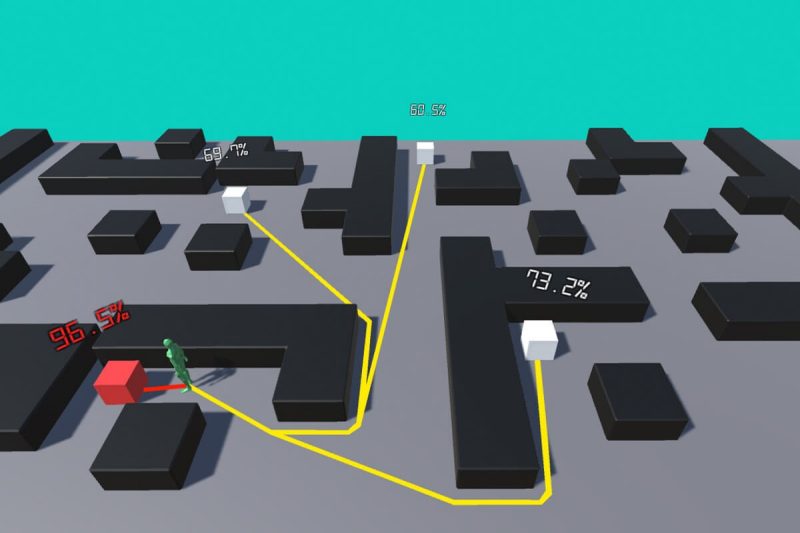

We started the project by looking into a scenario where interaction was limited and could be predicted to a certain extent. We decided to work on a VR version of the puzzle game Sokoban. The game consists of pushing boxes in a maze to take them to their final positions. The challenge arises from the fact that the boxes can only be pushed (never pulled) and always move one square at a time (Figure 1). Our goal was to allow the user to push a real object that behaved like a box in the game. The robot could then be used to simulate different boxes, one at a time. Since this is a game with clear goals, we also reasoned that it would be easier to predict which box the player would engage next.
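The push rule described above can be sketched on a discrete grid. This is only an illustration of the classic Sokoban rule, not the code used in the project; the function name `try_push` and the set-based representation of boxes and walls are assumptions made for the example.

```python
from typing import Set, Tuple

Cell = Tuple[int, int]  # (x, y) grid coordinates


def try_push(player: Cell, direction: Cell,
             boxes: Set[Cell], walls: Set[Cell]) -> Tuple[Cell, Set[Cell]]:
    """Try to move the player one square; push a box if one is in the way.

    Returns the (possibly unchanged) player position and box set.
    """
    dx, dy = direction
    target = (player[0] + dx, player[1] + dy)
    if target in walls:
        return player, boxes  # blocked by a wall
    if target in boxes:
        beyond = (target[0] + dx, target[1] + dy)
        if beyond in walls or beyond in boxes:
            return player, boxes  # the box cannot move, so neither can the player
        boxes = (boxes - {target}) | {beyond}  # the box moves exactly one square
    return target, boxes


# Hypothetical corridor: one box to the right of the player, a wall further right.
player, boxes = try_push((0, 0), (1, 0), {(1, 0)}, {(3, 0)})
print(player, boxes)
```

A second push in the same direction leaves both the player and the box in place, because the square beyond the box is a wall.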

Conversion to VR

Designing a VR version of Sokoban was challenging. Pushing is not a discrete action in real life, and we wanted to give players the feeling of being in complete control of the box. In addition, the character in the original game cannot reach more than one side of a box at a time. In the VR game, unless the physical box is really large, the player can always reach the adjacent edges without having to move. Finally, the robot has a finite speed, so boxes cannot be placed too close to each other.

We went through many iterations, including placing the boxes far apart from each other and adding mechanisms to prevent simultaneous touching. However, we felt that these solutions were not satisfactory, given that the boxes could still be moved anywhere. We then explored boxes that could disappear into holes, effectively locking them into specific places (Figure 2). Similarly, we tried to find ways to constrain how the box could be pushed around (Figure 4). Unfortunately, this solution made the action of pushing the box less intuitive. Our final solution for the Sokoban game was to design levels that limited access to the boxes. For the final design, we created a bank environment, where the goal is to steal a cart full of money and gold bars. The scene contains two carts, one full and one empty. Initially, the player only has access to the empty cart. The empty cart must be pushed onto a pressure sensor so that the laser detectors can be disabled. After that, the player gains access to the secured, full cart.

Implementation of simple player prediction

For the general case, we still needed the ability to move the robot to the right position in space, so we investigated how to predict which box the user was about to touch. We started by looking into a simple distance function: the closer the box was, the higher the probability that the user would touch it. However, that did not work well in the levels we designed. If a box is behind a wall, it may be physically close and yet still far away from the player along any walkable route (or even inaccessible). The next step was to use a pathfinding algorithm to compute the probability as a function of how far an object is along the possible paths that the player could take. This idea worked well and will likely be used as one of the prediction factors.
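The difference between straight-line distance and path distance can be illustrated with a small grid example. The sketch below is not the project's implementation: it assumes a grid level where `#` marks a wall, uses breadth-first search as a stand-in for whatever pathfinding algorithm was used, and the weighting `1 / (1 + steps)` is a hypothetical choice made only to turn distances into probabilities.

```python
from collections import deque


def path_distances(grid, start):
    """Breadth-first search; returns {cell: steps} for every reachable cell."""
    rows, cols = len(grid), len(grid[0])
    dist = {start: 0}
    queue = deque([start])
    while queue:
        r, c = queue.popleft()
        for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            nr, nc = r + dr, c + dc
            if (0 <= nr < rows and 0 <= nc < cols
                    and grid[nr][nc] != "#" and (nr, nc) not in dist):
                dist[(nr, nc)] = dist[(r, c)] + 1
                queue.append((nr, nc))
    return dist


def box_probabilities(grid, player, boxes):
    """Assign each box a probability that decreases with its walking distance.

    Unreachable boxes get probability 0. The 1 / (1 + steps) weight is an
    illustrative assumption, not a value taken from the report.
    """
    dist = path_distances(grid, player)
    weights = {b: (1.0 / (1 + dist[b]) if b in dist else 0.0) for b in boxes}
    total = sum(weights.values())
    if total == 0:
        return weights  # no box is reachable
    return {b: w / total for b, w in weights.items()}


# Hypothetical level: box_a is 2 cells away in a straight line but behind a wall.
level = ["..#.",
         "..#.",
         "...."]
player = (0, 1)
box_a, box_b = (0, 3), (2, 0)
print(box_probabilities(level, player, [box_a, box_b]))
```

In this level, `box_a` is closer to the player in a straight line, but the wall forces a six-step detour, so `box_b` (three steps away) receives the higher probability.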

Physical Construction

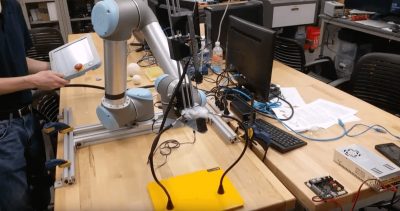

To give haptic feedback to the boxes used in the Sokoban game, we constructed a wooden box with the help of the School of Architecture wood shop. The box was then attached to the top of the robotic platform. We also replaced the original all-terrain wheels with Mecanum wheels so that the box could be pushed in any direction. The idea was to move the robot automatically between different boxes and let the user push it passively around during the game.

However, after the robot was turned on, we learned that the wheel drivers would not allow the wheels to turn freely. As a result, the robot could not be pushed once it was energized. Unfortunately, the emergency button did not disable the wheels as expected. To fully implement this scenario, we will need to either incorporate a pressure sensor on the box or find a way to cut the power to the wheels from the robot operating system.

Science Festival

Our final bank scenario was exhibited during the 2019 Science Festival and attracted a wide audience. Due to the issue with the wheel drivers and safety concerns, we decided to run the scenario with the robot turned off. Instead, we moved the robot manually from one point to the other. Players were briefed on the premise of the game and how to use the empty cart to disable the laser sensors.

One of our goals for this semester was to have our system integrated with the Qualisys tracking in the Perform Studio. This would be the initial step to have it ready for Cube use. However, since the Perform Studio was unavailable during the festival, we decided to use the Vive's lighthouse tracking system from Sandbox. Since we have been informed of the possibility of adding Vive tracking to the Cube, we will reconsider our initial plans of using Qualisys tracking.

Visitors considered our game really fun, and kids would often throw themselves to the ground in order to avoid the laser sensors. We found that the box was too heavy for small kids to push, and thus we provided some assistance during the demo. Since we needed a quick way to attach and detach the real box from the virtual box, we used a regular Vive controller as a tracker. In this way, the person conducting the demo could use the buttons to move the physical box without moving the virtual one. However, since the controller was not firmly secured to the box, we found that a mismatch between the physical prop and the virtual box would sometimes occur. In the future, we will use Vive trackers to create a more secure connection and control the attachment through the Unity application.
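The attach and detach behavior described above can be sketched as a small state holder. This is a simplified illustration in Python rather than the Unity code used in the demo; the class name `PropBinding`, its methods, and the 2D tuple positions are all assumptions. While attached, the virtual box follows the tracked position; while detached, the virtual box stays where it was so the physical box can be repositioned.

```python
class PropBinding:
    """Couples a virtual box to a tracked physical prop (hypothetical sketch)."""

    def __init__(self, virtual_pos):
        self.virtual_pos = virtual_pos  # last known position of the virtual box
        self.attached = False

    def attach(self):
        """Start following the tracker (e.g. on a controller button press)."""
        self.attached = True

    def detach(self):
        """Stop following the tracker; the virtual box stays where it is."""
        self.attached = False

    def update(self, tracked_pos):
        """Call once per frame with the tracker position; returns the virtual position."""
        if self.attached:
            self.virtual_pos = tracked_pos
        return self.virtual_pos


binding = PropBinding(virtual_pos=(0.0, 0.0))
binding.attach()
print(binding.update((0.5, 0.0)))   # virtual box follows the prop
binding.detach()
print(binding.update((2.0, 1.0)))   # prop is carried elsewhere; virtual box stays put
```

In this sketch the virtual box snaps to the tracker when it is reattached. Whether to snap or to keep an offset is a design choice the report does not specify.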

The Architecture Scenario

In our second scenario, the goal was to investigate opportunities to create physical architectural spaces with functionality. Instead of just letting users look around, we want to provide them with affordances to functionally use the space.

Design Space

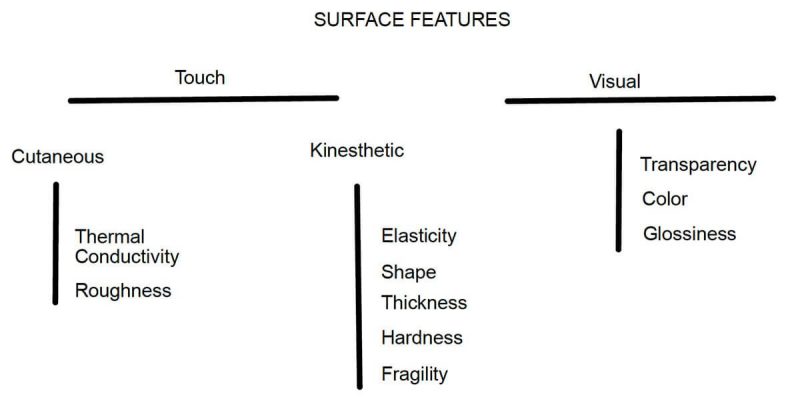

The exploration of the design space of tangibility in architecture started by considering the different surfaces that we interact with daily. We classified features into three main categories: visual, cutaneous touch, and kinesthetic touch (Figure 8). We noticed that although materials are important, most features are useful because of their kinesthetic affordances.

Next, we concentrated on the way we interact with objects in space. By observing objects in living spaces, we selected a few objects of importance for haptic reproduction:

- Chairs

- Tables

- Doors

- Walls

Conceptual Design

Our goal was to find a design solution that could be mounted on our ground platform to support haptic interaction in a variety of scenarios. Because they are made for human use, objects have specific dimensional constraints. For example, tables and chairs are designed according to the proportions of the torso and legs (Figure 9). The same is true for doors. Doors and walls are generally larger, so we focused on providing them at the positions in space where they would be more likely to be touched.

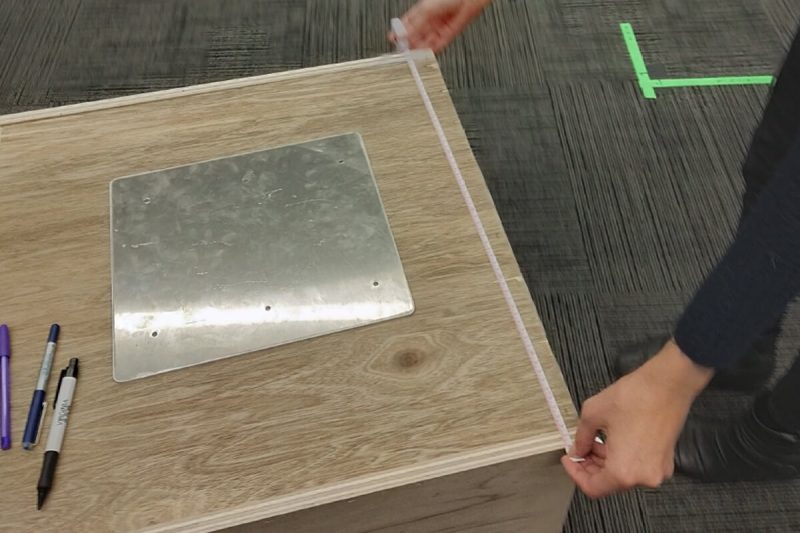

We approached the design using the idea of shape-shifting objects. Our aim was to provide as many surfaces as possible with minimum changes in the actual model geometry. We tried to achieve that by looking into placement and dimensions that were common to the objects we wanted to replicate. To test ideas we used drawings and small scale low fidelity prototypes (Figure 2).

The door was one of the most difficult objects to design. We wanted to reproduce not only the knobs and handles but also other parts of the door that we touch while opening and closing it. In addition, the edge of the door moves in a specific way when someone holds it. Our initial designs contained a small segment of the door attached to a hinge. However, we thought that this approach was too complex and affected the stability of the platform.

Our final design (Figure 11) has no hinges for the door. Instead, we plan to move the wall surface along a circular trajectory, so that the edge of the door moves in an appropriate way. It is not clear how much precision we need to make it work, so we expect that this prototype will be very useful.
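The circular trajectory mentioned above follows from simple door geometry: the free edge of a door of a given width sweeps an arc around the hinge. The sketch below computes where the wall surface would have to be for a given opening angle. It is a geometric illustration only; the function name `door_edge_pose`, the 2D floor-plane coordinates, and the convention that angle 0 means the closed door lies along the positive x axis are assumptions, not details from the mechanical design.

```python
import math


def door_edge_pose(hinge, width, angle):
    """Position and orientation of the free edge of a virtual door.

    hinge: (x, y) of the virtual hinge on the floor plane, in meters.
    width: door width in meters (the radius of the arc).
    angle: opening angle in radians; 0 means the door lies along +x.
    Returns (x, y, heading), where heading is the direction of the door
    plane and therefore the orientation the carried surface should take.
    """
    x = hinge[0] + width * math.cos(angle)
    y = hinge[1] + width * math.sin(angle)
    return x, y, angle


# Sample the arc of a hypothetical 0.9 m door as it opens to 90 degrees.
for step in range(4):
    theta = math.radians(30 * step)
    print(door_edge_pose((0.0, 0.0), 0.9, theta))
```

Sampling this function over the opening angle gives a sequence of target poses that a platform controller could track. How finely the arc must be sampled is tied to the precision question raised above, which the prototype is meant to answer.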

Mechanical Design

Following our initial conceptual design we created a detailed mechanical design. We considered the exact dimensions, possible actuation mechanisms, and overall structural integrity. For the change between wall and table, our design uses two linear actuators. We also designed the chair surface to be retractable, so that it could provide enough support for sitting while minimizing interference with the robot movement (Figure 2).

System Integration

Even though the order for the ground platform was placed on May 20th, the robot only arrived on October 2nd. This left us with little time in the semester to work on the tasks related to system integration and robot control. However, we were still able to successfully integrate the game control in Unity with the platform control in ROS. Our initial evaluation seems to indicate that this architecture may lead to extra delays and instabilities in the robot positioning, in particular at faster speeds. Ideally, we would like to integrate the external tracking directly into the robot CPU. This will be further investigated during the next steps of the project.

Next Steps

In the second part of this project, we intend to continue working on the two scenarios described. For the Sokoban scenario, that would involve finding a way to cut power to the robot wheels or redesigning the scenario to work around the issue. Also, for conducting user studies, we would like to instrument the robot with more emergency buttons. For the architecture scenario, we intend to implement our mechanical design and conduct tests in a corresponding virtual environment. In both of them, more time will be dedicated to integrating actuation and external tracking, as well as implementing basic trajectory and pose control algorithms.

ICAT 2019-2020 Major Sead Grant

The Cube

The Cube is a highly adaptable space for research and experimentation in big data exploration, immersive environments, intimate performances, audio and visual installations, and experiential investigations of all types.
