Designing an imaging platform for 3D image generation

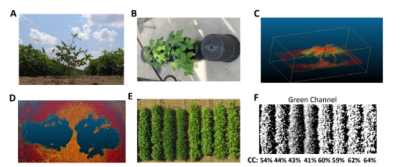

Plant phenomics is a research discipline that focuses on the accurate measurement of plant traits of agricultural and economic importance. It is at the frontier of agriculture and plant research because advances in artificial intelligence and computer vision algorithms allow plant scientists to capture plant traits in unprecedented detail. One branch of plant phenomics is field-scale high-throughput phenotyping, which plays an increasingly important role in smart farm technology and modern breeding programs. For example, 3D models of plants growing in the field can provide crucial information about the architecture of the plant shoot, which can be used to design robotic harvesting machines. In faculty advisor Dr. Li’s group, the goal of field phenotyping is to understand the genetic control of pod locations in green soybean (edamame) plants so that breeding and genetic approaches can be applied to modify the plant architecture and improve harvest efficiency.

Unlike phenotyping of potted plants in a controlled environment, field-scale phenotyping measures plant traits under real growth conditions and can provide more informative data for breeders and farmers. In this work, we focus on reconstructing 3D models of live plants under field conditions. Recent studies have used structured light [Nguyen, 2015], depth cameras [Andujar, 2016], LiDAR [Selbeck, 2014] or drone imagery [Thorp, 2018]. These approaches differ in resolution, in image capture speed, and in the cost of the imaging platform. The first three approaches use ground-based instruments, which offer higher resolution but are slow at capturing data for individual plants. The drone-based approach uses photogrammetry and is very fast; however, its resolution is limited by the small number of drone images available for each plant.

In this proposal, we plan to implement a new ground-based phenotyping device that collects image data for 3D model reconstruction using photogrammetry. One of the faculty advisors, Dr. Nathan Hall, has been applying photogrammetry to create 3D models of the insect collection at Virginia Tech. This approach requires capturing hundreds of photos from different vantage points and stitching them together using structure-from-motion algorithms, as implemented in software packages such as AgiSoft MetaShape. One of the time-limiting steps of this approach is image capture, which requires a person to place a sample in the middle of a turntable and manually change the angle of the camera for each shot. This process is labor intensive, typically requires hours to days of manual operation for one sample, and cannot be scaled to field applications. To eliminate this bottleneck, we propose to automate image capture using a robotic arm that rotates around the target and captures images for 3D reconstruction. This design will reduce the time needed to capture images from a few hours to a few minutes per sample. We also plan to build the device with multiple low-cost cameras mounted at fixed locations on the robotic arm to provide images from multiple angles. Artificial illumination and a low-reflectance black curtain will be used to avoid non-uniform reflection from the background. This device will provide a significant improvement in speed and convenience for field-scale image acquisition and will facilitate ongoing field-scale phenotyping experiments in faculty advisor Dr. Li’s group. If this prototype is successful, a miniature device will also be created for faculty advisor Dr. Hall’s group to improve workflow efficiency for 3D model building of the insect collection.
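As a rough illustration of how the rotating arm with several fixed cameras reaches the "hundreds of photos" that photogrammetry needs, the sketch below counts the images produced by one sweep. The function name `capture_plan`, the camera count, and the angular step are hypothetical values chosen for the example, not parameters of the actual device.

```python
def capture_plan(num_cameras, step_deg, sweep_deg=360):
    """Return (stop_angles, total_images) for one sweep of the arm.

    Assumptions (not from the proposal): the arm pauses every `step_deg`
    degrees and every camera takes exactly one photo at each stop.
    """
    if num_cameras <= 0 or step_deg <= 0:
        raise ValueError("num_cameras and step_deg must be positive")
    # Number of stops that fit in the sweep; the small epsilon guards
    # against floating-point rounding when step_deg is not an integer.
    num_stops = int(sweep_deg / step_deg + 1e-9)
    stop_angles = [i * step_deg for i in range(num_stops)]
    return stop_angles, num_stops * num_cameras


# Hypothetical configuration: 5 cameras, one stop every 10 degrees.
angles, total = capture_plan(num_cameras=5, step_deg=10)
print(len(angles), "stops,", total, "images per plant")
```

Halving the step or adding cameras scales the image count linearly, so the trade-off between capture time and model detail can be explored before any hardware is changed.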

This project will draw on computer science, engineering and visual art to create a non-destructive plant phenotyping platform that will be deployed to create 3D images of living plants in the field. Because the project aims to create an imaging platform that supports plant phenomics, the platform could be manufactured commercially to help researchers and farmers by removing the phenotyping bottleneck. Students from ME and SPES are involved, forming an interdisciplinary team that will collaborate to create a need-based imaging platform. The project will take place at Smyth Hall and Newman Library using greenhouse plants, and the platform will be tested at Kentland Farm for field applications.

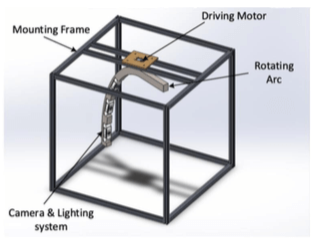

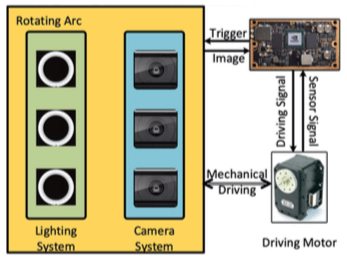

The mechanical design of the proposed platform consists of a mounting frame, a driving motor, a rotating arc, and a camera and lighting system, as shown in Fig. 1. The mounting frame provides a stable mounting reference for the other components. The driving motor is installed at the top center of the mounting frame, and its output side is connected to the rotating arc. The motor rotates the arc with respect to the mounting frame, and the encoder embedded in the motor provides position feedback. The camera and lighting system is installed on the rotating arc. The lighting system provides stable illumination for the camera system to capture the object. During scanning, a black backdrop can cover the mounting frame to prevent background illumination from being captured.
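The position feedback described above can be turned into an arc angle with a simple conversion. The following is a minimal sketch; the encoder resolution (`counts_per_rev`) and the optional `gear_ratio` between motor and arc are hypothetical parameters, since the proposal does not specify the motor or any gearing.

```python
def encoder_to_arc_angle(counts, counts_per_rev, gear_ratio=1.0):
    """Convert raw encoder counts to the arc angle in degrees, in [0, 360).

    Assumptions (not from the proposal): the encoder reports a signed
    cumulative count, and `gear_ratio` is motor revolutions per arc
    revolution (1.0 means the arc is driven directly).
    """
    if counts_per_rev <= 0 or gear_ratio <= 0:
        raise ValueError("counts_per_rev and gear_ratio must be positive")
    motor_revs = counts / counts_per_rev
    arc_revs = motor_revs / gear_ratio
    # Wrap into one revolution so angles stay comparable across sweeps.
    return (arc_revs * 360.0) % 360.0


# Hypothetical 4096-count encoder, arc driven directly by the motor.
print(encoder_to_arc_angle(1024, counts_per_rev=4096))
```

Storing the wrapped angle next to each image gives the reconstruction step a consistent reference even if the arm makes more than one revolution.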

Because the platform must be portable for field use, the mechatronic design of the proposed system includes a portable yet powerful on-board computer, a driving motor, and a camera and lighting system. A portable power source or battery can be used to power the whole system without interruption. The computer will run a control and data collection program that controls the driving motor and lighting system and collects position data from the motor encoder and images from the camera system. The encoder position data and the camera images will be stored together with a timestamp for use in the subsequent 3D modeling step.
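One possible way to store each image together with its encoder position and timestamp is a small capture log, sketched below. The record fields, the file naming scheme, and the CSV format are all illustrative assumptions; the proposal does not define a storage format.

```python
import csv
import io
from dataclasses import asdict, dataclass, fields
from datetime import datetime, timezone


@dataclass
class CaptureRecord:
    """One image plus the metadata needed for 3D modeling (hypothetical)."""
    timestamp_utc: str
    camera_id: int
    arc_angle_deg: float
    image_file: str


def make_record(camera_id, arc_angle_deg, when=None):
    """Build a record; `when` should be a timezone-aware UTC datetime."""
    when = when or datetime.now(timezone.utc)
    stamp = when.strftime("%Y%m%dT%H%M%S.%fZ")
    # Hypothetical naming scheme: camera, arc angle, then capture time.
    image_file = f"cam{camera_id:02d}_{arc_angle_deg:06.2f}deg_{stamp}.jpg"
    return CaptureRecord(when.isoformat(), camera_id, arc_angle_deg, image_file)


def write_log(records, stream):
    """Write the records as CSV rows to any text stream."""
    names = [f.name for f in fields(CaptureRecord)]
    writer = csv.DictWriter(stream, fieldnames=names)
    writer.writeheader()
    for record in records:
        writer.writerow(asdict(record))


# Example: log one simulated capture to an in-memory buffer.
buffer = io.StringIO()
write_log([make_record(camera_id=3, arc_angle_deg=45.0)], buffer)
print(buffer.getvalue())
```

In a real run, `stream` would be a file opened on the on-board computer, and the arc angle would come from the motor encoder rather than a literal value.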

The overall goal of this project is to develop a portable imaging platform that helps create 3D models by rapidly capturing hundreds of images of a living plant from different vantage points. The major outputs of this project will be the 3D models and the imaging platform. The platform will serve as a framework only; further funding will be required for higher-quality cameras that produce higher-resolution images. Once the objective of the platform, capturing images of edamame plants, has been achieved, the platform can be kept in the library or lab as needed and can also be taken to exhibitions on request.

Plan for Evaluating Project Success

The 3D models will be used to determine the success of this project. If we can create 3D plant images with this platform and locate the pods on the plants, the project will be considered successful. We anticipate that the 3D models generated with this platform will help plant breeders collect phenotypic data easily. The main deliverables of the project will be computer vision and machine learning algorithms that allow the platform to collect phenotypic data in the field.

Timeline

- September 20-October 15: Building the model

- October 15-October 31: Testing in the field

- November 1-December 11: Evaluating

Midterm report

Currently, Brandon Lester is helping us buy and assemble the components needed to build the platform. So far, he has ordered 75% of the components and will order the rest soon. He has already assembled the circuits and the frames.

Tags

- Agriculture

- College of Agriculture and Life Sciences

- College of Engineering

- College of Science

- Department of Mechanical Engineering

- Designing a Sustainable World

- Empowering Data

- Engineering

- Hailin Ren

- ICAT Project

- Jingyuan Qi

- Kshitiz Dhakal

- Life Sciences

- Mechanical Engineering

- Physics

- Qian Zhu

- School of Plant and Environment Science

- Science

- Student SEAD Grant