Mobile Learning

With rapid technological innovation, the consumption of mobile digital media has become ubiquitous in our daily lives. This project aims to integrate approaches from diverse disciplines, including (1) eye-tracking of media (PI Choi), (2) cognitive tasks (Co-PI Katz), (3) communication surveys (Co-PI Ivory), and (4) big data and image analysis (Co-PI Morota), to systematically understand the use of mobile technology through the lens of human development. First, using a head-mounted eye-tracker, we will identify the attentional processes underlying the comprehension of mobile media content in different age groups (young children, young adults, and older adults). Next, we will measure working memory to examine how individual differences in cognitive capacity may affect how people process and comprehend mobile media. Lastly, we will use image analysis techniques to automate the processing of eye-tracking data and develop an open-access interactive graphical user interface to dynamically view and interpret a large number of eye and scene data files. Findings from these studies will advance our understanding of how certain media experiences may be beneficial or harmful in relation to individual difference factors such as age or baseline cognitive ability.

Midterm Report

Major Activities

We have finalized 1) the video stimuli, 2) the comprehension questions and media survey questionnaires, 3) the cognitive tasks, and 4) the eye-tracking research protocol; we have also 5) performed initial image analysis to estimate tablet coverage. Additionally, we have completed the IRB submission, supervised graduate students, and recruited and trained 14-15 undergraduate research assistants for data collection. The students contributed to various aspects of the research process, including video stimuli creation, survey and protocol development, image analysis, data-collection training, and a public outreach event.

Video stimuli

We reviewed a body of literature on health-related messages in media, focusing on healthy eating and exercise. We identified two television shows and developed two 10-minute video clips (child-directed and adult-directed) as video stimuli for the project. The child-directed clip is drawn from multiple Sesame Street episodes on health. The adult-directed clip is constructed from several ER episodes centered on a teenage boy's health. We reviewed these episodes to ensure that their content is unlikely to be offensive or harmful to participants.

Comprehension questions and media survey questionnaires

We developed a set of questions to measure comprehension of the video content, including ordering, open-ended, and multiple-choice questions. We also developed survey questionnaires to measure participants' screen media time and media multitasking.

Cognitive tasks

We finalized five cognitive tasks and created a protocol for each:

1) Backward Corsi-Block Task (clicking on boxes in the reverse of the order in which they were shown)
2) Zoo Go/No-Go Task (pressing a blue key on a keyboard when participants see orangutan helpers and a yellow key when they see the zoo animals)
3) NIH Toolbox Flanker Inhibitory Control and Attention Test (choosing the button that matches the direction in which the middle arrow is pointing)
4) NIH Toolbox Picture Vocabulary Test (selecting the picture that most closely matches the meaning of a word)
5) NIH Toolbox Dimensional Change Card Sort Test (matching a series of bivalent test pictures to target pictures, first according to one dimension and then switching to another)
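The reversed-order rule of the Backward Corsi-Block Task can be made concrete with a small scoring sketch. It assumes each trial is recorded as the sequence of block IDs presented and the sequence the participant clicked; the function names and the span rule (length of the longest correctly reversed sequence) are hypothetical illustrations, not the project's scoring protocol.

```python
from typing import List, Sequence, Tuple

# Hypothetical trial record: (block IDs in presentation order, block IDs the participant clicked).
Trial = Tuple[Sequence[int], Sequence[int]]


def is_correct_backward(presented: Sequence[int], response: Sequence[int]) -> bool:
    """A backward trial counts as correct only if the clicks reproduce
    the presented sequence exactly in reverse order."""
    return list(response) == list(reversed(presented))


def backward_span(trials: List[Trial]) -> int:
    """Hypothetical span score: length of the longest presented sequence
    that was reproduced correctly in reverse (0 if none were)."""
    return max((len(p) for p, r in trials if is_correct_backward(p, r)), default=0)


if __name__ == "__main__":
    trials = [
        ([3, 7], [7, 3]),              # correct
        ([1, 4, 8], [8, 4, 1]),        # correct
        ([2, 5, 6, 9], [9, 6, 2, 5]),  # incorrect: 5 and 2 are swapped
    ]
    print(backward_span(trials))  # prints 3
```

Other span definitions (for example, requiring two correct trials at a length) would only change `backward_span`; the reversal check stays the same.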

Eye-tracking protocol and training

We finalized two 30-sec calibration videos featuring a 9-point dot pattern and two 30-sec validation videos featuring 4 points, which are used to determine the accuracy of the calibration. Working with undergraduate assistants, we tested the calibration video and finalized the research setting. We then tested the setup by recording the assistants' eye movements while they watched the two 10-min video clips (child-directed and adult-directed; order counterbalanced across participants) on a tablet that was either mounted on a table or free to move.
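As a rough illustration of how a 4-point validation can quantify calibration accuracy, the sketch below computes, for each validation point, the mean Euclidean distance between the recorded gaze samples and the point's known location. It assumes gaze and targets share the same scene-camera pixel coordinates and that samples are already grouped by validation point; all names are hypothetical, and converting pixels to degrees of visual angle would require the camera's field of view, which is not specified here.

```python
import math
from statistics import mean
from typing import Dict, List, Tuple

Point = Tuple[float, float]  # (x, y) in scene-camera pixels (assumed shared coordinate frame)


def validation_errors(targets: Dict[str, Point],
                      gaze: Dict[str, List[Point]]) -> Dict[str, float]:
    """For each validation point, return the mean Euclidean distance between
    the gaze samples recorded while it was shown and its true location.
    Raises statistics.StatisticsError if a point has no samples."""
    errors = {}
    for name, (tx, ty) in targets.items():
        errors[name] = mean([math.hypot(gx - tx, gy - ty) for gx, gy in gaze[name]])
    return errors


def overall_accuracy(errors: Dict[str, float]) -> float:
    """Average error across validation points (lower means a more accurate calibration)."""
    return mean(list(errors.values()))


if __name__ == "__main__":
    # Hypothetical 4-point validation layout and gaze samples.
    targets = {"top_left": (100, 100), "top_right": (540, 100),
               "bottom_left": (100, 380), "bottom_right": (540, 380)}
    gaze = {"top_left": [(103, 104), (97, 96)],
            "top_right": [(540, 100)],
            "bottom_left": [(100, 380)],
            "bottom_right": [(540, 380)]}
    errs = validation_errors(targets, gaze)
    print(errs["top_left"], overall_accuracy(errs))  # prints 5.0 1.25
```

A session could then be flagged for recalibration when the overall error exceeds some threshold; any such cutoff would be a study-specific choice.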

Recruitment materials and data collection training

We created recruitment materials including a flyer and a website (http://kchoi.org/participate/). In addition, all research assistants completed background checks and training for behavioral and eye-tracking data collection.

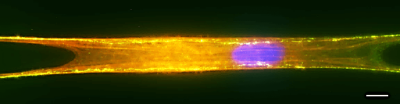

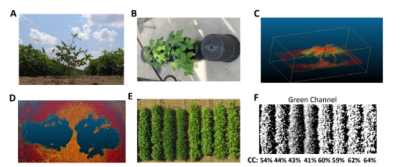

Image analysis

One of our main goals is to use image analysis techniques to automate the processing of eye-tracking data and to develop an open-access interactive graphical user interface to dynamically view and interpret a large number of eye and scene data files. While manual coding of eye-tracking videos can identify the location of a fixation, it does not account for the dynamics of viewers' visual fields, such as how the size of the screen within the visual field changes over time (i.e., tablet coverage). Tablet coverage may change from moment to moment, and these changes may be influenced by age or media content. Examining tablet coverage will reveal how viewers move their heads and bodies and switch attention among objects in their visual field. Using test videos, we performed image analysis in Python to estimate tablet coverage. We first converted RGB frames to grayscale and detected the edges of the tablet using the Canny edge detection method. We then detected contours in the edge map and drew a bounding box around them. Next, we computed the midpoint of the bounding box and calculated its width and height. Tablet coverage was calculated as the number of image pixels classified as tablet pixels.

Overall, we have made considerable progress in the first half of the grant period. During this time, our team finalized the experimental protocol and completed eye-tracking and behavioral data collection training for all research assistants. We have also made progress in developing the image analysis software. Further, we successfully conducted public outreach at the 2019 Virginia Tech Science Festival. Lastly, we funded one graduate and three undergraduate students during Summer 2019 and trained 14 undergraduate students.

Training and professional development were provided to numerous students (see Participants): 3 graduate students (1 with summer funding) and 15 undergraduate research assistants (3 with summer funding). These students have been involved in all phases of the research, including design, piloting, and data collection training.

To disseminate information to non-academic audiences, we developed a website (http://kchoi.org/participate/) to advertise the research project and recruit participants. This platform will also be used to disseminate our findings. In addition, our research team presented an eye-tracking exhibit at the 2019 Virginia Tech Science Festival (November 19, 2019) as public outreach to introduce eye-tracking research to young children and their families (164 children and adults participated in total).

We have completed the data collection protocol and training. In the next reporting period, we will recruit and run participants for the experiment. Additionally, we will draft initial results and submit an abstract for conference presentation (e.g., Cognitive Science Society, July 29 to August 1; submission deadline: February 3). For the online software tool, we will continue to refine the image analysis software and deploy it as an online Shiny web server application. For public outreach, we will continue to find ways to connect with and contribute to broader communities, such as presenting an eye-tracking exhibit at ICAT's Open (at the) Source exhibition in Spring 2020. Lastly, we will fund one additional graduate student and 3-5 undergraduate students and supervise students preparing poster presentations for the Dean Undergraduate Research and Creative Scholarship Conference.