Source Form

Source Form is a stand-alone device that collects crowdsourced images of a user-defined object, stitches the available visual data (e.g., photos tagged with the search term) together through photogrammetry, creates a watertight model from the resulting point cloud, and 3D prints a physical form. The device works independently of subjective user input, which leads to two possible outcomes:

- Produce iterative versions of a specific object (e.g., the Statue of Liberty) increasing in detail and accuracy over time as the collective dataset (e.g., uploaded images of the statue) grows.

- Produce democratized versions of common objects (e.g., an apple) by aggregating a spectrum of tagged image results.

This project demonstrates that an increase in readily available image data closes the gap between physical and digital perceptions of form over time. For example, if Source Form is asked to print the Statue of Liberty today and then again six months from now, the later result will be more accurate and detailed than the earlier one. As people continue to take pictures of the monument and upload them to social media, blogs, and photo-sharing sites, the pool of available images grows in quantity and quality. Because Source Form gathers a new dataset for each print, the resulting forms are always evolving. The collection of prints the machine produces over time is cataloged and displayed in linear groupings, giving viewers an opportunity to see growth and change in physical space.

In addition to rendering change over time, Source Form can create a snapshot of how a more common object is perceived on the web. For example, an image search for “apple” returns a spectrum of conditions and varieties, from rotting crab apples to gleaming Granny Smiths. Source Form aggregates all of these images into one model and outputs the collective web presence of an “apple”. Characteristics of the model are guided by how frequently each kind of image appears in the search results and by the order in which the results are returned. The resulting democratized forms are emblematic of the web’s collective and popular perceptions.

Imaging Pipeline

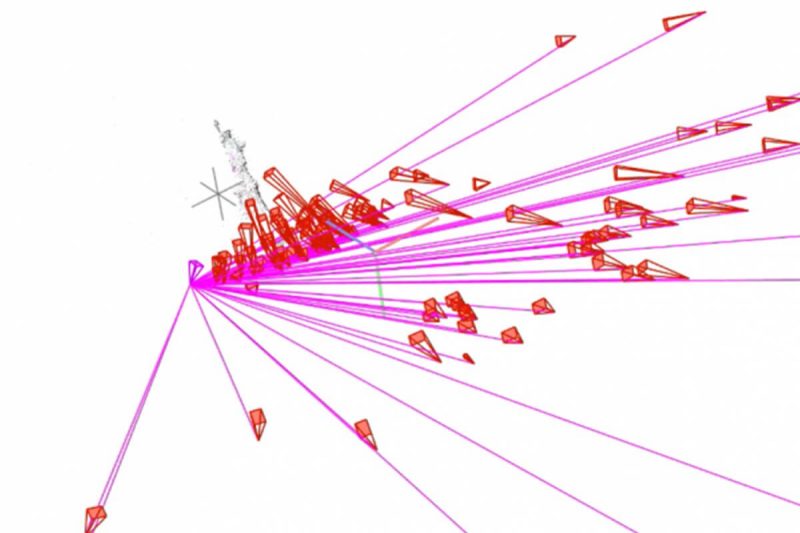

1.1 Image retrieval: The user specifies the object they would like to construct. The software then automatically crawls the results of a text-based image search. During the search, the user can provide feedback to disambiguate the targeted content. The initially filtered images are then used for reverse image search (content-based image retrieval) to obtain additional relevant images of the same object from the Internet.

1.2 Feature-based correspondence matching: This step extracts local image patches from the images and matches them across the image collection. By establishing correspondences among images, we can reliably remove images that do not contain the target object. This is important because the image collection produced by the image retrieval step can be very noisy. The main challenge in this step lies in matching image patches that were captured under significantly different conditions, e.g., different viewpoints, lighting, and exposure. We use robust feature extraction and matching algorithms to address this issue.
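The filtering idea in this step can be illustrated with a minimal sketch. The source does not name the feature extraction or matching algorithms it uses, so the nearest-neighbor ratio test below is a swapped-in, commonly used technique, not necessarily the authors' method. The function names, the thresholds (`ratio`, `min_matches`), and the toy 2-D descriptors are all assumptions made for illustration.

```python
import math

def ratio_test_matches(desc_a, desc_b, ratio=0.8):
    """Return (i, j) pairs where desc_a[i]'s nearest neighbor in desc_b is
    clearly closer than its second-nearest neighbor (ratio test)."""
    matches = []
    if len(desc_b) < 2:
        return matches
    for i, da in enumerate(desc_a):
        # Distances from this descriptor to every descriptor in the other image.
        dists = sorted((math.dist(da, db), j) for j, db in enumerate(desc_b))
        (best, j), (second, _) = dists[0], dists[1]
        if best < ratio * second:
            matches.append((i, j))
    return matches

def filter_images(descriptors, reference, min_matches=2, ratio=0.8):
    """Keep the names of images that share enough matches with the reference."""
    ref = descriptors[reference]
    kept = []
    for name, desc in descriptors.items():
        if name == reference:
            continue
        if len(ratio_test_matches(ref, desc, ratio)) >= min_matches:
            kept.append(name)
    return kept

# Hypothetical toy data: 2-D "descriptors" stand in for real high-dimensional ones.
descriptors = {
    "ref": [(0.0, 0.0), (10.0, 0.0), (0.0, 10.0)],
    "same": [(0.1, 0.0), (10.0, 0.2), (0.0, 9.9), (50.0, 50.0)],
    "unrelated": [(30.0, 30.0), (31.0, 30.0), (30.0, 31.0)],
}
kept = filter_images(descriptors, "ref")
print(kept)
```

In this toy run, only the image whose descriptors lie close to the reference survives; the unrelated image produces ambiguous nearest neighbors and is dropped. A real pipeline would typically also verify matches geometrically before trusting them.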

1.3 3D mesh reconstruction: Given the feature correspondences, we apply structure-from-motion and multi-view stereo techniques to jointly estimate the camera poses and the 3D structure of the points. We then construct a 3D mesh model from the extracted 3D point cloud using software built on top of COLMAP [2, 3, 1], which we use in multiple stages of the pipeline. The 3D mesh model is then refined and processed for 3D printing.
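The authors' own software is not shown in the source. As a rough orientation, the sketch below only assembles the stock COLMAP command-line sequence (feature extraction, matching, sparse mapping, undistortion, dense stereo, fusion, Poisson meshing) as laid out in COLMAP's documentation. The helper name `colmap_commands` and the workspace layout (`database.db`, `images/`, `sparse/`, `dense/`) are assumptions, and the commands are built but not executed.

```python
from pathlib import Path

def colmap_commands(workspace, colmap="colmap"):
    """Build (but do not run) a stock COLMAP CLI reconstruction sequence.

    Assumed layout: <workspace>/images holds the filtered photos; the
    sparse/ and dense/ directories must exist before the commands run.
    """
    ws = Path(workspace)
    db = str(ws / "database.db")
    images = str(ws / "images")
    sparse = str(ws / "sparse")
    dense = str(ws / "dense")
    fused = str(ws / "dense" / "fused.ply")
    mesh = str(ws / "dense" / "meshed-poisson.ply")
    return [
        # Structure from motion: features, matches, camera poses, sparse points.
        [colmap, "feature_extractor", "--database_path", db, "--image_path", images],
        [colmap, "exhaustive_matcher", "--database_path", db],
        [colmap, "mapper", "--database_path", db, "--image_path", images,
         "--output_path", sparse],
        # Multi-view stereo: undistort, estimate depth maps, fuse to a point cloud.
        [colmap, "image_undistorter", "--image_path", images,
         "--input_path", str(ws / "sparse" / "0"), "--output_path", dense,
         "--output_type", "COLMAP"],
        [colmap, "patch_match_stereo", "--workspace_path", dense,
         "--workspace_format", "COLMAP",
         "--PatchMatchStereo.geom_consistency", "true"],
        [colmap, "stereo_fusion", "--workspace_path", dense,
         "--workspace_format", "COLMAP", "--input_type", "geometric",
         "--output_path", fused],
        # Surface reconstruction from the fused point cloud.
        [colmap, "poisson_mesher", "--input_path", fused, "--output_path", mesh],
    ]

commands = colmap_commands("statue_of_liberty")  # hypothetical workspace name
for cmd in commands:
    print(" ".join(cmd))
```

To actually run the sequence, each list could be passed to `subprocess.run(cmd, check=True)` on a machine with COLMAP installed. Exhaustive matching is used here only for simplicity; large crawled collections may call for a different matching strategy.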

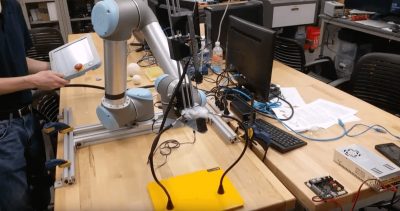

Printing Pipeline

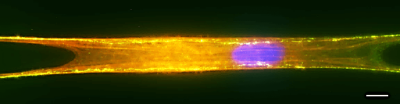

2.1 3D mesh repair: The reconstructed mesh is often a non-manifold surface geometry. To create printable features, the surface is thickened along its surface normals. This thickening is performed asymmetrically to preserve feature resolution on the exterior surface. The resulting volumetric 3D model is then voxelized for printability analysis and fabrication. This component, which is built on recent results from an NSF-funded, multi-university research project on design for manufacturability (NSF CMMI #1546985), uses a voxel-based representation of part geometry to (i) highlight part features that will not be printed because they are too small and (ii) identify the print orientation that minimizes build time and support material consumption. The voxel-based geometry is then sliced into bitmap images, one per voxel layer, which can be fed to the printer.
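The orientation search and layer slicing described above can be sketched on a toy voxel set. This is not the NSF project's algorithm: the cost function (layer count as a stand-in for build time, plus empty cells beneath occupied voxels as a stand-in for support material), the restriction to six axis-aligned orientations, and all function names are assumptions. Voxel coordinates are assumed to be non-negative integers, and the small-feature check in (i) is not covered.

```python
def reorient(voxels, axis, flip):
    """Re-express voxels so that `axis` (0=x, 1=y, 2=z) is the build direction."""
    out = set()
    for v in voxels:
        others = [v[i] for i in range(3) if i != axis]
        h = -v[axis] if flip else v[axis]
        out.add((others[0], others[1], h))
    # Shift so the lowest voxel sits on the build plate (height 0).
    h0 = min(h for _, _, h in out)
    return {(a, b, h - h0) for a, b, h in out}

def layer_count(voxels):
    """Number of layers to print: a crude proxy for build time."""
    return max(h for _, _, h in voxels) + 1

def support_count(voxels):
    """Empty cells lying beneath an occupied voxel in the same column."""
    tops, filled = {}, {}
    for a, b, h in voxels:
        tops[(a, b)] = max(tops.get((a, b), 0), h)
        filled[(a, b)] = filled.get((a, b), 0) + 1
    return sum(tops[c] + 1 - filled[c] for c in tops)

def best_orientation(voxels, support_weight=1.0):
    """Return (cost, axis, flip) for the cheapest axis-aligned orientation."""
    candidates = []
    for axis in range(3):
        for flip in (False, True):
            v = reorient(voxels, axis, flip)
            cost = layer_count(v) + support_weight * support_count(v)
            candidates.append((cost, axis, flip))
    return min(candidates)

def slice_layers(voxels):
    """Return one 0/1 bitmap (list of rows) per layer, bottom layer first."""
    width = max(a for a, _, _ in voxels) + 1
    depth = max(b for _, b, _ in voxels) + 1
    layers = [[[0] * width for _ in range(depth)]
              for _ in range(layer_count(voxels))]
    for a, b, h in voxels:
        layers[h][b][a] = 1
    return layers

# Hypothetical T-shaped part: a 3-voxel stem with a 3-voxel bar on top.
part = {(1, 0, 0), (1, 0, 1), (1, 0, 2), (0, 0, 3), (1, 0, 3), (2, 0, 3)}
best = best_orientation(part)
bitmaps = slice_layers(reorient(part, 2, True))  # bar placed on the build plate
print(best, bitmaps[0], bitmaps[1])
```

For this flat part the search picks the orientation that lays the T on its side, which needs a single layer and no support. Printing it upright with the bar on top would leave six unsupported cells under the bar, while flipping it removes the overhang.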

2.2 3D printing: Models queried by users in the Source Form system vary in size, feature resolution, and texture. Polymer-based additive processes such as material jetting and powder bed fusion were eliminated from the selection pool because of their high cost and form factor. Material extrusion systems are relatively inexpensive, but they were also eliminated because the process is relatively slow, cannot fabricate high-resolution features, and produces parts with poor surface finish, which could hide the evolution of the model as the image dataset grows. Mask projection vat photopolymerization (MPVP) can achieve high feature resolution with good surface finish. The designed MPVP system can handle a wide range of photopolymers, from rigid to flexible, to provide a representative and unique experience to the user. The bottom-up MPVP system is equipped with continuous projection and drawing to enable high-speed manufacturing, achieving speeds in the range of 13-25 mm/min, which makes it the best fit for the intended application.
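To give a feel for the quoted speed range, the sketch below converts a part height into a build-time window. It assumes that 13-25 mm/min is the vertical draw rate and ignores setup, resin changes, and post-processing; the 100 mm part height and the function names are hypothetical.

```python
def build_time_minutes(height_mm, speed_mm_per_min):
    """Time to draw a part of the given height at a constant vertical speed."""
    if speed_mm_per_min <= 0:
        raise ValueError("speed must be positive")
    return height_mm / speed_mm_per_min

def build_time_range(height_mm, slow=13.0, fast=25.0):
    """Return (shortest, longest) build time in minutes for the speed range."""
    return (build_time_minutes(height_mm, fast),
            build_time_minutes(height_mm, slow))

# Hypothetical 100 mm tall model.
shortest, longest = build_time_range(100.0)
print(f"{shortest:.1f} to {longest:.1f} minutes")
```

Under these assumptions, a 100 mm tall model would take roughly 4 to 8 minutes of drawing time.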