Dancing Plants

The world population is expected to reach 9.7 billion by 2050, and climate change is making traditional agricultural practices increasingly difficult. To address the looming food crisis, we need to develop revolutionary agricultural techniques, along with new crop plants, that can double or triple crop yields on less arable land. Novel methods to improve plant health and production are in high demand.

Plants are immobile and cannot escape from a harsh environment or from predators. Although plants cannot walk away, most have evolved sophisticated mechanisms to move their roots, leaves, flowers, and stems in order to capture nutrients and sunlight, defend against enemies, and acclimatize to harsh environments. Plants can also exhibit micro-movements in response to microbial pathogens and abiotic stress (salt, drought, light, pH, temperature, etc.). These subtle micro-movements are difficult to observe with the naked eye, but they can be recorded with a video camera. The micro-movement patterns of plants grown under “healthy” conditions could differ from those of plants grown under “stressed” conditions. We propose to develop an image analysis system, integrated with machine learning, to interpret plant movement patterns under different growth conditions. The processed image data will be converted to audible sound. We hypothesize that plants grown under stressed conditions will make “crying” motions, while healthy plants will make “happy” motions, and that both can be rendered audible through sonification. We will use such observations to inform and improve the algorithm that drives the mimic sound, which will in turn be used to treat plants and thereby improve plant performance. This will result in a feedback loop between plant motion and the ensuing sonic treatment.

In this project, four Virginia Tech faculty members and their research groups will combine their interdisciplinary expertise to develop an innovative approach to observing, or listening to, plants as they respond to various stress stimuli, and to design new agricultural practices that improve plant performance.

Midterm Report

The goal of this project is to establish an imaging system that allows us to analyze pepper plant movement under different conditions. After data collected under controlled conditions are processed with computer vision and machine learning algorithms, plant movement patterns will be sonified to generate audible sound, which can be used to monitor plant growth and health. The project was initiated in Fall 2019, and we have since held weekly meetings to discuss progress.
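
To make the sonification step concrete, here is a minimal sketch of one possible mapping from movement to sound. It is an illustration under stated assumptions, not the project's actual algorithm: it assumes a per-frame motion magnitude is already available, and the function names, the 220 to 880 Hz range, the sample rate, and the tone length are all hypothetical choices.

```python
import math
import struct
import wave

SAMPLE_RATE = 22050  # samples per second (assumed value)


def motion_to_frequencies(magnitudes, f_min=220.0, f_max=880.0):
    """Linearly map motion magnitudes onto a frequency range in Hz."""
    lo, hi = min(magnitudes), max(magnitudes)
    span = hi - lo
    if span == 0:
        # No variation in motion: use the midpoint of the range.
        return [(f_min + f_max) / 2.0 for _ in magnitudes]
    return [f_min + (m - lo) / span * (f_max - f_min) for m in magnitudes]


def synthesize(frequencies, tone_seconds=0.25, amplitude=0.5):
    """Render one sine tone per frequency as signed 16-bit sample values."""
    samples = []
    phase = 0.0
    n = int(SAMPLE_RATE * tone_seconds)
    for freq in frequencies:
        step = 2.0 * math.pi * freq / SAMPLE_RATE
        for _ in range(n):
            samples.append(int(amplitude * 32767 * math.sin(phase)))
            phase += step  # carry the phase across tones to avoid clicks
    return samples


def write_wav(path, samples):
    """Write mono 16-bit PCM samples to a WAV file."""
    with wave.open(path, "wb") as wav:
        wav.setnchannels(1)
        wav.setsampwidth(2)
        wav.setframerate(SAMPLE_RATE)
        wav.writeframes(struct.pack("<%dh" % len(samples), *samples))
```

With a list of magnitudes in hand, `write_wav("plant_motion.wav", synthesize(motion_to_frequencies(magnitudes)))` would produce a short audio file in which larger movements sound higher in pitch. A linear mapping is only the simplest option; a logarithmic or scale-quantized mapping may be easier to listen to.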

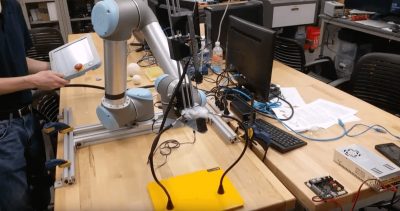

Over the fall semester, we successfully established a prototype imaging system (Fig. 1). The system uses an NVIDIA Jetson TX2 embedded computing module to control the lights and multiple embedded cameras, with remotely controlled smart infrastructure that allows for automated lighting and environmental control. We have developed two lighting conditions: one with full-spectrum LED light, and another with mixed blue/red LED light. We have also established a hydroponic system that allows us to grow pepper plants without soil. As shown in the attached video, we are now able to capture data that monitor plant movement under different light conditions.

Our team presented recent developments at the Virginia Tech Science Festival on Nov. 16, 2019 (Fig. 2). Several hundred visitors engaged with the project, and young attendees tried operating the system and learned about the image-making process. A VT faculty member from the industrial engineering department also expressed strong interest in collaborating in the future.

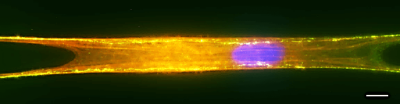

Co-PI Jia-Bin Huang has extracted dense motion between all consecutive frames in the video dataset (Fig. 3). This motion analysis will help visualize potentially imperceptible movement and will feed into sonification in the next project period.
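
The report does not say which method was used to extract motion. As a rough illustration of what frame-to-frame motion estimation involves, the sketch below uses exhaustive block matching, a much simpler stand-in for true dense optical flow. It assumes frames are small 2D lists of grayscale values; the function names, block size, and search radius are hypothetical.

```python
def block_motion(prev, curr, block=4, search=2):
    """Estimate one (dy, dx) displacement per block by exhaustive block matching.

    prev, curr: equally sized 2D lists of grayscale intensities.
    Returns a dict mapping each block's top-left (row, col) in prev to the
    (dy, dx) shift that minimizes the sum of absolute differences (SAD).
    """
    height, width = len(prev), len(prev[0])
    field = {}
    for top in range(0, height - block + 1, block):
        for left in range(0, width - block + 1, block):
            best_key, best_shift = None, (0, 0)
            for dy in range(-search, search + 1):
                for dx in range(-search, search + 1):
                    r0, c0 = top + dy, left + dx
                    # Skip candidate positions that fall outside the frame.
                    if r0 < 0 or c0 < 0 or r0 + block > height or c0 + block > width:
                        continue
                    cost = sum(
                        abs(prev[top + r][left + c] - curr[r0 + r][c0 + c])
                        for r in range(block)
                        for c in range(block)
                    )
                    # On equal cost, prefer the smaller shift so that
                    # static regions report (0, 0).
                    key = (cost, abs(dy) + abs(dx))
                    if best_key is None or key < best_key:
                        best_key, best_shift = key, (dy, dx)
            field[(top, left)] = best_shift
    return field


def mean_magnitude(field):
    """Average displacement length over all blocks, in pixels."""
    return sum((dy * dy + dx * dx) ** 0.5 for dy, dx in field.values()) / len(field)
```

Calling `block_motion` on each pair of consecutive frames and reducing the result with `mean_magnitude` yields one number per frame pair, a convenient summary to plot or sonify. Block matching resolves only whole-pixel shifts, so sub-pixel micro-movements would need a finer method.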

With the current preliminary data, we are also discussing the possibility of writing an NSF proposal in Spring 2020.

In the next project period, we will upgrade the cameras to an Azure Kinect 3 camera depth capture system in order to document 3D growth cycles, which will add new dimensions to the data. We will also develop a setup for thermal camera documentation that can be used to monitor temperature fluctuations on the leaf surface. The collected temperature patterns will also be analyzed, and we will develop methods for converting the temperature data to audible data.
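
One simple way the temperature-to-sound conversion could work is to quantize each leaf temperature reading to a musical note. The sketch below is only an assumption about how such a mapping might look: the 15 to 40 °C range, the MIDI note range, and the function names are hypothetical, and only the note-to-frequency formula (A4 = 440 Hz, equal temperament) is standard.

```python
def temperature_to_midi(temp_c, t_min=15.0, t_max=40.0, note_min=48, note_max=84):
    """Map a leaf temperature in Celsius to the nearest MIDI note number.

    Readings outside the assumed [t_min, t_max] range are clamped.
    """
    clamped = max(t_min, min(t_max, temp_c))
    fraction = (clamped - t_min) / (t_max - t_min)
    return note_min + round(fraction * (note_max - note_min))


def midi_to_hz(note):
    """Equal-temperament conversion with A4 (MIDI note 69) at 440 Hz."""
    return 440.0 * 2.0 ** ((note - 69) / 12.0)
```

Quantizing to notes rather than mapping to a continuous frequency makes small fluctuations audible as discrete steps, at the cost of hiding changes smaller than one step.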