Body, Full of Time

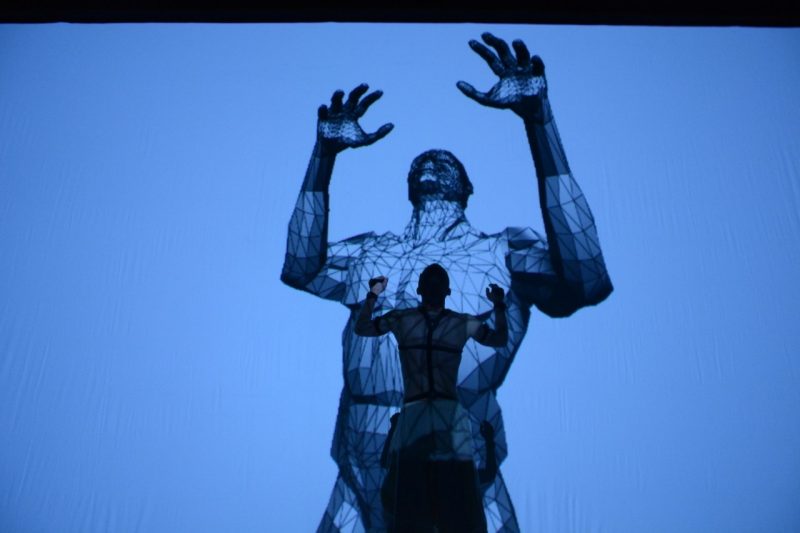

This new work of choreography in virtual reality allows audiences to experience a fully immersive, movement-based performance in digital space. It takes viewers on a journey through three corporeally inspired surrealistic spaces (designed and visualized by Zach Duer), populated by motion-captured avatar dancers (performed and choreographed by Scotty Hardwig) and accompanied by new musical compositions by Charles Nichols. This hybrid work of digital dance merges movement, sound, and visual art to push the boundaries of embodiment and physical performance in digital space.

Midterm Report

Conceptualization

We have conceptualized the artwork and its format, outlining Time Garden as a three-movement work presented as an installation on Quest VR headsets. There are three distinct scenes, each installed on its own headset, so that audience members can choose which to wear and for how long. They will be free to view as many of the scenes as they like, in any order, experiencing different sections of the work and the different choreographic world each contains. The three scenes are named for times of day, “Morning,” “Noon,” and “Night,” and are energetically and compositionally distinct. The headsets will be placed on a table, each accompanied by a printed image that indicates the name of its scene and gestures toward the feeling and content within.

Each scene is made up entirely of animated bodies, constructed from the elementary anatomy of the human body (circulatory and lymphatic systems, geometric mesh skin, and 3D scans of the real performer’s body used as a landscape). At the intersection of physical and imagined virtual spaces, many hyperreal performance options become possible. Bodies and movements can be multiplied in the hundreds or thousands, and the performer’s body is fragmented in space. For this piece, we are primarily interested in the transference of the physical to the virtual, and what this creates for the performance and perception of an art form like dance.

Recorded motion capture

Using the SOPA dance studio, the Perception Neuron Hi5 Gloves, and the Perception Neuron Pro motion capture suit, we recorded performed movement for the first two scenes of the piece. This took place over more than half a dozen sessions, each three or more hours long, in which we conceptualized, rehearsed, and recorded the movements. We still need to record the third scene.

Motion capture pipeline

We created a pipeline for recording animation from the Perception Neuron Hi5 Gloves. These gloves were designed to stream real-time data into Unity, and no plugin currently connects them to any standard recording software. We therefore developed a system for recording the animation in Unity and exporting it. This left us with two separate motion capture exports for every recorded “take” of performed motion: one for the body and one for the hands. So we also developed a system for combining the animation keys onto a single joint system and retargeting that animation onto our chosen avatar. Retargeting one motion capture take onto the avatar currently takes approximately an hour of labor. We are now retargeting the motion capture from the second movement, which includes almost two dozen takes.
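To make the body-and-hands merge concrete, here is a minimal Python sketch. It assumes each exported take can be read into a dictionary that maps joint names to timestamped rotation keys. The data layout, the functions `merge_takes` and `retarget`, the `hand_offset` parameter, and the joint names in the example are all hypothetical; this is not the Unity-based system described above, only an illustration of the two steps.

```python
from typing import Dict, List, Tuple

# Hypothetical data layout: each take maps a joint name to a list of
# (time_in_seconds, (rot_x, rot_y, rot_z)) keyframes in degrees.
Keyframe = Tuple[float, Tuple[float, float, float]]
Take = Dict[str, List[Keyframe]]


def merge_takes(body: Take, hands: Take, hand_offset: float = 0.0) -> Take:
    """Combine a body take and a hand take onto one joint system.

    hand_offset (seconds) shifts the hand keys to line up with the body
    recording. Where both takes animate the same joint, the glove data
    replaces the suit data (an assumption, not a documented rule).
    """
    merged: Take = {joint: list(keys) for joint, keys in body.items()}
    for joint, keys in hands.items():
        merged[joint] = [(t + hand_offset, rot) for t, rot in keys]
    return merged


def retarget(take: Take, joint_map: Dict[str, str]) -> Take:
    """Rename source joints to the avatar's joint names.

    Joints missing from joint_map are dropped. This only remaps channels;
    full retargeting also corrects for different rest poses and bone
    lengths, which this sketch ignores.
    """
    return {joint_map[j]: keys for j, keys in take.items() if j in joint_map}


if __name__ == "__main__":
    body = {"Hips": [(0.0, (0.0, 0.0, 0.0))], "RightHand": [(0.0, (0.0, 10.0, 0.0))]}
    hands = {"RightHand": [(0.0, (5.0, 0.0, 0.0))],
             "RightHandIndex1": [(0.0, (30.0, 0.0, 0.0))]}
    merged = merge_takes(body, hands, hand_offset=0.5)
    avatar = retarget(merged, {"Hips": "pelvis", "RightHand": "hand_r",
                               "RightHandIndex1": "index_01_r"})
    print(sorted(avatar))  # ['hand_r', 'index_01_r', 'pelvis']
```

Keeping merging and renaming as separate steps lets one joint map be reused for every take in a movement; rest-pose and bone-length correction would still have to be handled on top of this.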

LIDAR scanning and reconstruction of Scotty’s Body

Working with Max Ofsa at the VT Newman Library, we conducted two scanning sessions of Scotty’s body, with the intention of using the scan data to create virtual environments composed of his body. These sessions were conducted in August and September, and we are still waiting for Max to send us the resulting data despite numerous prompts.

Purchased Quest VR headsets

We purchased three Quest VR headsets and developed a pipeline for loading our Unity scenes onto them.

First Scene complete

We completed the first scene in Unity. It is a bright white virtual environment in a seemingly infinite space, with a gridded floor, some abstract formal patterns in the sky, and a massive grid of avatars performing Scotty’s recorded movements. A few minor tweaks remain, but the scene is more or less done. Significant work went into rebuilding the scene for the Quest, which required switching from deferred rendering to forward rendering. We also had to rebuild the avatars to include Level of Detail (LOD) groups that reduce the geometric complexity of avatars that are farther away, because the Quest could not render 2,400 detailed skinned avatars with adequate performance.
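The idea behind Level of Detail groups can be illustrated with a small Python sketch: estimate how much of the view each avatar covers, then switch to a cheaper mesh as that share shrinks. This is a simplified pinhole-camera approximation, not Unity's exact LODGroup calculation, and the functions `screen_fraction`, `select_lod`, and `tally_grid`, along with the grid size, spacing, avatar height, field of view, and thresholds, are hypothetical, chosen only so that the grid holds 2,400 avatars.

```python
import math
from collections import Counter
from typing import Sequence


def screen_fraction(height: float, distance: float, vertical_fov_deg: float) -> float:
    """Approximate fraction of the view height covered by an object.

    Assumes a simple pinhole projection; Unity's own LODGroup metric uses
    the object's bounds and the LOD bias, so real values will differ.
    """
    half_fov = math.radians(vertical_fov_deg) / 2.0
    return height / (2.0 * distance * math.tan(half_fov))


def select_lod(fraction: float, thresholds: Sequence[float]) -> int:
    """Return the LOD index for a screen fraction, or -1 if culled.

    thresholds must be descending: LOD i is used while fraction >= thresholds[i].
    """
    for level, threshold in enumerate(thresholds):
        if fraction >= threshold:
            return level
    return -1


def tally_grid(rows: int, cols: int, spacing: float, height: float,
               fov_deg: float, thresholds: Sequence[float]) -> Counter:
    """Count how many avatars in a rows x cols grid land in each LOD.

    The viewer stands at the origin; the grid is centred left to right and
    starts one spacing in front of the viewer.
    """
    counts: Counter = Counter()
    for r in range(rows):
        for c in range(cols):
            x = (c - (cols - 1) / 2.0) * spacing
            z = (r + 1) * spacing
            distance = math.hypot(x, z)
            counts[select_lod(screen_fraction(height, distance, fov_deg), thresholds)] += 1
    return counts


if __name__ == "__main__":
    # Hypothetical values: 48 x 50 = 2,400 avatars, 2 m apart, 1.8 m tall,
    # a 90 degree vertical field of view, and three LOD levels.
    print(tally_grid(48, 50, 2.0, 1.8, 90.0, [0.3, 0.1, 0.005]))
```

With these made-up numbers, only a handful of avatars nearest the viewer are drawn at full detail and most of the grid falls into the lowest-detail level, which is the kind of saving the LOD groups are meant to provide.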

Presented the work at an alumni event in NYC

We presented the first scene on a Quest VR headset at an alumni event in New York City, in collaboration with CAUS and ICAT. Some alumni were *extremely* enthusiastic about the project. Some asked us whether we had considered the military applications. Really. I’m not kidding.

ICAT 2019-2020 Major Seed Grant
