AuralSurface

Our research tests the capabilities of an augmented reality design tool for real-time, immersive data visualization within a virtual space. Our cross-disciplinary team brings together an architect and interior designer (Matt Wagner), a digital interactive sound specialist (Ivica Ico Bukvic), and a data visualization artist (Dane Webster), who joined the team in September. Together we are researching how an immersive experience can provide multisensory feedback for the development of AuralSurface, a responsive surface that mitigates sound.

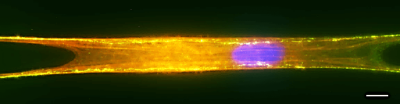

Conceptualized as a response to distractions in the workplace, AuralSurface is a responsive surface inspired by the need to control the ambient noise of everyday office life, while also serving as an adjustable separator that maintains a sense of open space. For workplace performance and comfort, the responsive surface optimizes interior environments by adapting to the changing reverberation rhythms of voices and footsteps. AuralSurface can also be parametrically calibrated to a desired acoustic setting, increasing or decreasing acoustic damping to suit the needs of the interior space and its occupants.

As office cultures trend toward collaborative working environments with mobile workstations, office space designs are becoming increasingly open, and the need to mitigate distracting and disruptive noise is growing. AuralSurface seeks to reduce fatigue and stress in the workplace by controlling ambient noise disturbances. AuralSurface’s design allows it to autonomously deploy an acoustic material at specific locations when the perceived decibel levels are higher than normal. Beyond its physical response, the deployed acoustic material can also serve as a visual cue. Those holding conversations may recognize it as a subtle sign to speak more softly or to take the conversation to another area.

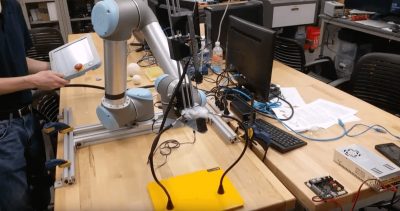

AuralSurface is a panelized surface that otherwise appears to be a typical modular system. AuralSurface’s array responds to commands from an automated control system based on noise levels and proxemics, and it can also be operated manually by occupants through a smartphone or tablet interface. It can further be seen as an aesthetically pixelized scratch pad on which users can doodle with various visual arrangements and, in doing so, set a desired level of privacy (both visual and aural).
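To make the control behavior described above more concrete, here is a minimal sketch of how a single panel might decide whether to deploy its acoustic material. It is an illustration only, not the project's actual controller: the names `PanelReading` and `should_deploy`, the baseline level, the decibel margin, the proximity radius, and the rule that noise and proximity must both be satisfied are all assumptions made for this example.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class PanelReading:
    """Hypothetical sensor snapshot for one panel (all fields are assumptions)."""
    sound_db: float                         # sound level measured near the panel, in dB
    nearest_person_m: float                 # distance to the closest occupant, in metres
    manual_override: Optional[bool] = None  # set from a phone/tablet app, else None


def should_deploy(
    reading: PanelReading,
    baseline_db: float = 50.0,   # assumed "normal" level for the space
    margin_db: float = 10.0,     # assumed tolerance above the baseline
    proximity_m: float = 2.0,    # assumed radius within which occupants count
) -> bool:
    """Return True if the panel should deploy its acoustic material."""
    # A manual command from an occupant takes precedence over automation.
    if reading.manual_override is not None:
        return reading.manual_override

    # Automated rule (assumed): deploy only when the level is above normal
    # and someone is close enough for the panel to matter.
    too_loud = reading.sound_db > baseline_db + margin_db
    occupied = reading.nearest_person_m <= proximity_m
    return too_loud and occupied


# Example: a loud conversation one metre from the panel.
loud_and_close = should_deploy(PanelReading(sound_db=65.0, nearest_person_m=1.0))
```

In this sketch the manual interface simply overrides the automated rule; a real system might instead blend the two, or smooth the readings over time to avoid panels flickering near the threshold.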

For an experiential effect, panels can be programmed to create dynamic patterns across a single module or an entire surface. Programmed arrays can also respond interactively to human proximity, maintaining a one-to-one scale interface between human and system.

With the introduction of our immersive tool (Microsoft HoloLens), we will be able to visualize data and metrics in real time via augmented reality. This visualization tool will overlay layers of graphic information on a virtual responsive surface. Project constraints have limited the size of our physical responsive surface prototypes. Through HoloLens’ virtual environment, we are able to view a digital prototype at full size within a space. The information seen and collected through HoloLens assists in refining the design of our current digital prototype. The virtual environment provides a better understanding of how the responsive surface responds to multisensory input (proximity and sound), as well as how it performs in various spaces.