Live Action VR - Integrating Live Actors into Virtual Reality Experiences in Real Time

Our project aims to seamlessly blend real-time stereoscopic video capture of a live actor into a synthetic virtual environment and to build an interactive, branched narrative in which a live participant can engage and navigate towards different endings. To achieve this goal, we built a diverse team of faculty and students from various disciplines including Computer Science, Cinema, and Visual Arts.

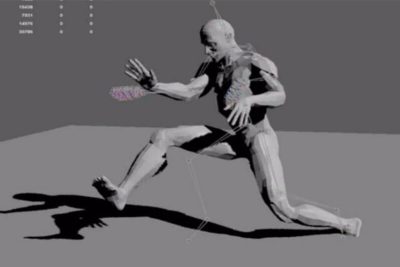

We distinguish live action from synthetic humans by its reliance on the direct translation of image data into the virtual environment, rather than on an explicit reconstruction step (3D scanning, modeling, and texturing). In the first phase, we will build a stereoscopic camera rig and a software framework for real-time visualization and compositing of actors into synthetic 3D environments. The environment will be viewed through a head-mounted display. We will use this platform to experiment with new narratives that combine techniques from cinema and theater with the immersion afforded by virtual environments. By using live-action characters, we expect to strengthen the emotional connection with users while retaining the freedom to create any imaginable setting. Through these efforts, we aim to help create a new mode of storytelling and human interaction -- one that could also offer considerable value in education, business, and many other fields.

Approach and methods

We have applied a holistic approach to the intersecting disciplines our project incorporates -- Computer Science, Cinema, and Visual Arts -- moving back and forth between story, technology, and visual arts. Our process has considered the limitations and opportunities of each of these areas, with the goal of creating an experience that significantly advances the possibilities of virtual reality.

One of the key components of our project is the technical integration of live-action video with the synthetic virtual environment. We have been combining our Computer Science and Cinema expertise towards this end. Drawing from the Cinematic Arts, we built a dual-camera 3D live-action rig to capture HD video of a performer against a green screen. The stereoscopic system should provide a more natural perception of depth and, we hope, a more immersive experience for the participant. On the Computer Science side, we are continually improving a custom-built pipeline that streams our HD video feed in real time into the Unity game engine, where a custom shader removes the green screen from the video. The ultimate goal is to make the performer appear to belong to the virtual environment. This is an extremely challenging task, and it will require fine-tuning as we proceed, as well as thoughtful consideration of cinema production elements (e.g., designing a costume that covers the actor's fine hairs, which are very difficult to key cleanly; maintaining precise, separate lighting of the green screen and the actor; etc.). Another challenge will be optimizing our system for low latency, so that it can be used in real time inside our VR environment.
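The green-screen removal described above can be illustrated with a minimal sketch. This is not the project's Unity shader; it is a per-pixel Python illustration of one common keying approach (green dominance with a soft edge), and the function names and the `threshold` and `softness` values are assumptions chosen for the example.

```python
def chroma_key_alpha(r, g, b, threshold=0.10, softness=0.20):
    """Return alpha in [0, 1] for one RGB pixel (channels in [0, 1]).

    Alpha is 0 where green clearly dominates (background) and 1 where it
    does not (actor), with a linear ramp of width `softness` in between.
    The default threshold and softness are illustrative assumptions.
    """
    # How far green exceeds the stronger of the other two channels.
    dominance = g - max(r, b)
    if softness <= 0:
        # Degenerate case: hard cut with no soft edge.
        return 0.0 if dominance > threshold else 1.0
    # Map dominance in [threshold, threshold + softness] to alpha in [1, 0].
    t = (dominance - threshold) / softness
    return 1.0 - min(1.0, max(0.0, t))


def composite(fg, bg, threshold=0.10, softness=0.20):
    """Blend a video pixel `fg` over a virtual-scene pixel `bg`."""
    a = chroma_key_alpha(*fg, threshold=threshold, softness=softness)
    return tuple(a * f + (1.0 - a) * b for f, b in zip(fg, bg))
```

A real-time version would run the same arithmetic per fragment on the GPU. The soft ramp is what makes thin, semi-transparent detail such as fine hair hard to handle: those pixels mix actor and screen colors, so they fall inside the ramp and become partially transparent.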

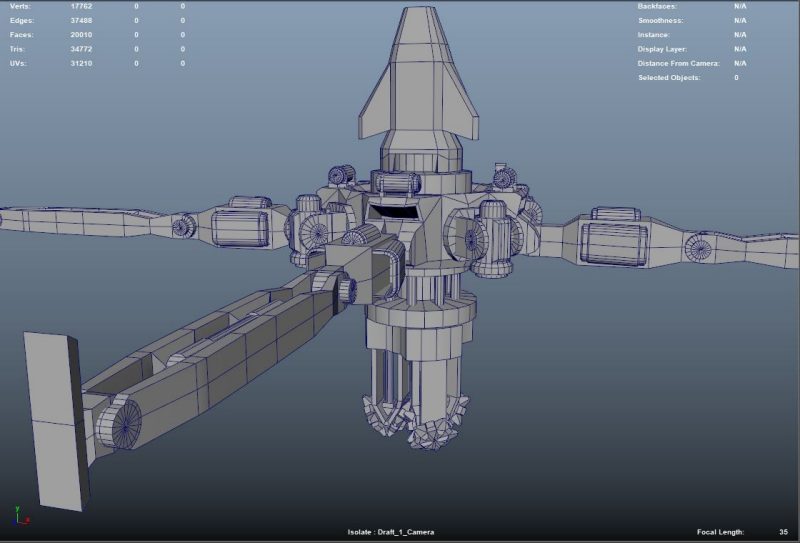

For the visual design of the virtual world, we drew from the discipline of Visual Art and modeled a spaceship cockpit in Maya, with an eye to realistic engineering of the craft. Eventually, we will design all the details of the cockpit, along with the views of the exterior world that appear in our story: the surface of Europa (the smallest of Jupiter’s Galilean moons), where our story begins; a thick surface layer of ice, through which our spaceship drills and melts its way down; a region of dark ocean void, as we descend beneath the ice layer; and finally a magical world full of bioluminescent sea creatures.

From this Visual Art and VR perspective, our goal is to create a virtual environment that looks and feels real. To achieve this goal, we will create a physical set that matches the virtual 3D models in shape, size, and texture (though not necessarily in color, since the participant will be wearing a VR headset). In this way, the user can touch nearby objects that correspond to the virtual world, which should significantly increase the sense of presence. The visual design also takes into account restrictions of the capture process (for example, a static camera) and narrative demands. Since the 3D world will contain real-time video, we intend to use cinema lighting techniques so that the physical lighting on the actor matches the virtual lights of the scene.

Finally, our experience is an interactive narrative, in which the interactions between actor and participant are fundamental to the development of the story. Our script has multiple decision points, which will be supported by a series of narrative controls and by the actor's improvisational skills. The narrative controls are the software support for the narrative, allowing the director to activate events in response to the user’s actions during the experience. Some of these controls advance the narrative from pre-established decision points. Others are used as recovery mechanisms to bring the narrative back on track.
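As a rough illustration of how such narrative controls could be organized, the sketch below models the script as a small graph of story beats. It is an assumption-laden example rather than the project's software: the `Beat` and `Narrative` classes, the beat names, and the event names are all hypothetical, loosely borrowing the story's settings (surface, ice, ocean).

```python
from dataclasses import dataclass, field
from typing import Dict, Optional


@dataclass
class Beat:
    """One story beat. `choices` maps a director-triggered event to the next beat."""
    name: str
    choices: Dict[str, str] = field(default_factory=dict)
    recovery: Optional[str] = None  # beat to jump to if the session stalls


class Narrative:
    def __init__(self, beats, start):
        self.beats = {b.name: b for b in beats}
        self.current = start
        self.history = [start]

    def _goto(self, name):
        self.current = name
        self.history.append(name)

    def trigger(self, event):
        """Director activates `event`; advance only if it is valid at this beat."""
        nxt = self.beats[self.current].choices.get(event)
        if nxt is None:
            return False
        self._goto(nxt)
        return True

    def recover(self):
        """Director steers an off-track session to this beat's recovery target."""
        target = self.beats[self.current].recovery
        if target is None:
            return False
        self._goto(target)
        return True


def demo_story():
    """Build a hypothetical three-beat story with two endings."""
    beats = [
        Beat("surface", {"start_drill": "ice"}),
        Beat("ice", {"keep_drilling": "ocean", "turn_back": "ending_return"},
             recovery="ocean"),
        Beat("ocean", {"lights_on": "ending_creatures",
                       "lights_off": "ending_dark"}),
        Beat("ending_return"),
        Beat("ending_creatures"),
        Beat("ending_dark"),
    ]
    return Narrative(beats, start="surface")
```

In this sketch, `trigger` corresponds to the controls that advance the story from a decision point, and `recover` to the controls that bring it back on track. Keeping a `history` list is one way to let the director review which branch a session actually followed.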

Our next steps include finalizing the branched-narrative script and casting our performer. We anticipate a highly iterative coordination between Performer, Writer, and Technology in order to deliver on such real-time, interactive storytelling.

Statement of innovation

Our proposal will allow the creation of compelling immersive narratives by sidestepping the traditional photorealistic character pipeline and enabling real-time interplay between story characters and users in a way that is not currently possible. State-of-the-art creation of real-time virtual characters relies on a complex pipeline that involves (1) 3D scanning of the actor to capture geometry, texture, and reflectance fields; (2) mapping animation and captured data to a hand-optimized model; and (3) computing and blending textures and poses to achieve real-time lighting and animation. Instead of digitally reconstructing characters, we propose filming them directly from the point of view required by the narrative. In addition, instead of scripted behavior, real-time streaming between the performer and the viewer will allow improvisation and natural conversation between them, increasing the sense of presence, intimacy, and user agency.

Acknowledgements

We are grateful to ICAT for its initial support of our project, and we hope the mini-SEAD investment will reap big dividends in the near future.

ICAT 2018-2019 Mini-SEAD Grant
